1 Installing Hive 2.3

- Upload apache-hive-2.3.6-bin.tar.gz to /opt/software and extract it to /opt/module:

tar -zxvf apache-hive-2.3.6-bin.tar.gz -C /opt/module/

- Rename apache-hive-2.3.6-bin to hive (in /opt/module):

mv apache-hive-2.3.6-bin hive

- Copy the MySQL JDBC driver mysql-connector-java-5.1.27-bin.jar to /opt/module/hive/lib/:

cp /opt/software/mysql-libs/mysql-connector-java-5.1.27/mysql-connector-java-5.1.27-bin.jar /opt/module/hive/lib/

- Under /opt/module/hive/conf, create a hive-site.xml file:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://hadoop102:3306/metastore?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root</value>

<description>password to use against metastore database</description>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

<property>

<name>hive.cli.print.header</name>

<value>true</value>

</property>

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>datanucleus.schema.autoCreateAll</name>

<value>true</value>

</property>

<!-- Add this after installing Tez -->

<property>

<name>hive.execution.engine</name>

<value>tez</value>

</property>

</configuration>

- Start Hive (from /opt/module/hive):

bin/hive

2 Integrating the Tez Engine with Hive

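- Tez is an execution engine for Hive that outperforms MR (MapReduce).

- When Hive compiles a query directly into MR programs, suppose it produces four MR jobs that depend on one another. In the figure above, green marks the ReduceTasks and the cloud shapes mark write barriers, where intermediate results must be persisted to HDFS.

- Tez can merge multiple dependent jobs into a single job. HDFS is then written only once and there are fewer intermediate nodes, which greatly improves job performance.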
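Consider a simplified model that counts only the final output write of each job and ignores shuffle spills to local disk. In that model, a chain of $k$ dependent MR jobs persists to HDFS once per job. Tez runs the chain as a single DAG and writes once:

$$
W_{\text{MR}}(k) = k, \qquad W_{\text{Tez}} = 1, \qquad W_{\text{MR}}(4) = 4
$$

For the four-job example, that is four HDFS writes versus one.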

2.1 Installing Tez

- Copy apache-tez-0.9.1-bin.tar.gz to the /opt/software directory on hadoop102.

- Upload apache-tez-0.9.1-bin.tar.gz to the /tez directory on HDFS, so that all cluster nodes can share it:

hadoop fs -mkdir /tez

hadoop fs -put /opt/software/apache-tez-0.9.1-bin.tar.gz /tez

- Extract apache-tez-0.9.1-bin.tar.gz:

tar -zxvf apache-tez-0.9.1-bin.tar.gz -C /opt/module

- Rename the directory (in /opt/module):

mv apache-tez-0.9.1-bin/ tez-0.9.1

2.2 Integrating Tez

- Go to Hive's configuration directory, /opt/module/hive/conf.

- In /opt/module/hive/conf, create a tez-site.xml file:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>tez.lib.uris</name>

<value>${fs.defaultFS}/tez/apache-tez-0.9.1-bin.tar.gz</value>

</property>

<property>

<name>tez.use.cluster.hadoop-libs</name>

<value>true</value>

</property>

<property>

<name>tez.history.logging.service.class</name>

<value>org.apache.tez.dag.history.logging.ats.ATSHistoryLoggingService</value>

</property>

</configuration>

- In hive-env.sh, ==add the Tez environment variables and the dependency-jar environment variables==. First rename the template:

mv hive-env.sh.template hive-env.sh

# Set HADOOP_HOME to point to a specific hadoop install directory

export HADOOP_HOME=/opt/module/hadoop-2.7.2

# Hive Configuration Directory can be controlled by:

export HIVE_CONF_DIR=/opt/module/hive/conf

# Folder containing extra libraries required for hive compilation/execution can be controlled by:

export TEZ_HOME=/opt/module/tez-0.9.1 # your Tez extraction directory

export TEZ_JARS=""

for jar in `ls $TEZ_HOME |grep jar`; do

export TEZ_JARS=$TEZ_JARS:$TEZ_HOME/$jar

done

for jar in `ls $TEZ_HOME/lib`; do

export TEZ_JARS=$TEZ_JARS:$TEZ_HOME/lib/$jar

done

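# $TEZ_JARS already starts with ":", so no separator is needed before it

export HIVE_AUX_JARS_PATH=/opt/module/hadoop-2.7.2/share/hadoop/common/hadoop-lzo-0.4.20.jar$TEZ_JARS

- In hive-site.xml, add the following property to switch Hive's execution engine (already added in step 1.4):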

<property>

<name>hive.execution.engine</name>

<value>tez</value>

</property>

2.3 Testing

- Start Hive from the /opt/module/hive directory:

bin/hive

- Create a table:

create table student(

id int,

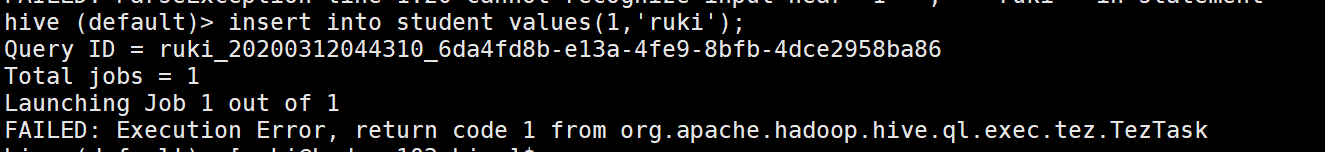

name string);- 插入数据(==我在这一步报错,解决方法详见2.4注意事项==)

insert into student values(1,"ruki");

- Query the table. If no error is reported, the integration succeeded.

2.4 Notes

2.4.1 Inserting data fails after integrating Tez

- When Tez runs, the NodeManager detects that the process is using too much memory and kills it:

Caused by: org.apache.tez.dag.api.SessionNotRunning: TezSession has already shutdown. Application application_1546781144082_0005 failed 2 times due to AM Container for appattempt_1546781144082_0005_000002 exited with exitCode: -103

For more detailed output, check application tracking page:http://hadoop103:8088/cluster/app/application_1546781144082_0005Then, click on links to logs of each attempt.

Diagnostics: Container [pid=11116,containerID=container_1546781144082_0005_02_000001] is running beyond virtual memory limits. Current usage: 216.3 MB of 1 GB physical memory used; 2.6 GB of 2.1 GB virtual memory used. Killing container.

- The cause: a Container running on a worker node tried to use too much (virtual) memory, so the NodeManager killed it.
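The numbers in the diagnostics line follow YARN's virtual-memory rule. A container's virtual memory limit is its physical memory allocation multiplied by yarn.nodemanager.vmem-pmem-ratio, which defaults to 2.1. For this container:

$$
\text{vmem limit} = 1\,\text{GB} \times 2.1 = 2.1\,\text{GB} < 2.6\,\text{GB (used)}
$$

Physical usage (216.3 MB of 1 GB) is well within its limit. Only the virtual-memory check is triggered, which is why the solution in 2.4.2 targets that check.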

[Excerpt] The NodeManager is killing your container. It sounds like you are trying to use hadoop streaming which is running as a child process of the map-reduce task. The NodeManager monitors the entire process tree of the task and if it eats up more memory than the maximum set in mapreduce.map.memory.mb or mapreduce.reduce.memory.mb respectively, we would expect the Nodemanager to kill the task, otherwise your task is stealing memory belonging to other containers, which you don't want.

2.4.2 Solution

- Turn off the virtual memory check by editing yarn-site.xml:

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

- After the change, be sure to distribute the file to all nodes and restart the Hadoop cluster:

xsync yarn-site.xml
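After running xsync, you may want to confirm that every node's yarn-site.xml disables the check before you restart. Below is a minimal sketch, not part of Hadoop. `vmem_check_disabled` is a hypothetical helper, and it assumes the property is written as a `<name>` followed directly by its `<value>`, as in the snippet above.

```shell
#!/usr/bin/env bash
# vmem_check_disabled FILE  (hypothetical helper, not a Hadoop tool)
# Exits 0 if FILE sets yarn.nodemanager.vmem-check-enabled to false,
# non-zero otherwise. Assumes <value> directly follows <name>.
vmem_check_disabled() {
  local file="$1"
  # Delete newlines so a <name> and its <value> on separate lines become
  # one string; any spaces or tabs between the two tags are tolerated.
  tr -d '\n' < "$file" \
    | grep -q '<name>yarn\.nodemanager\.vmem-check-enabled</name>[[:space:]]*<value>false</value>'
}
```

For example, on each node run `vmem_check_disabled /opt/module/hadoop-2.7.2/etc/hadoop/yarn-site.xml && echo "vmem check disabled"`. The path assumes the HADOOP_HOME used in hive-env.sh and Hadoop's standard etc/hadoop configuration directory.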